DUBAI: Short-form video app TikTok has released the findings of a report specially commissioned to help better understand young people’s engagement with potentially harmful challenges and hoaxes — pranks or scams created to frighten someone — in a bid to strengthen safety on the platform.

In a statement, the company said that its social networking service had been designed to “advance joy, connection, and inspiration,” but added that fostering an environment where creative expression could thrive also required it to prioritize safety for the online community, especially its younger members.

With this in mind, TikTok hired independent safeguarding agency Praesidio Safeguarding to carry out a global survey of more than 10,000 people.

TikTok also convened a panel of 12 youth safety experts from around the world to review and provide input into the report, and partnered with Dr. Richard Graham, a clinical child psychiatrist specializing in healthy adolescent development, and Dr. Gretchen Brion-Meisels, a behavioral scientist focused on risk prevention in adolescence, to advise it and contribute to the study.

The report found a high level of exposure to online challenges, with teenagers quite likely to encounter all kinds of them in their day-to-day lives.

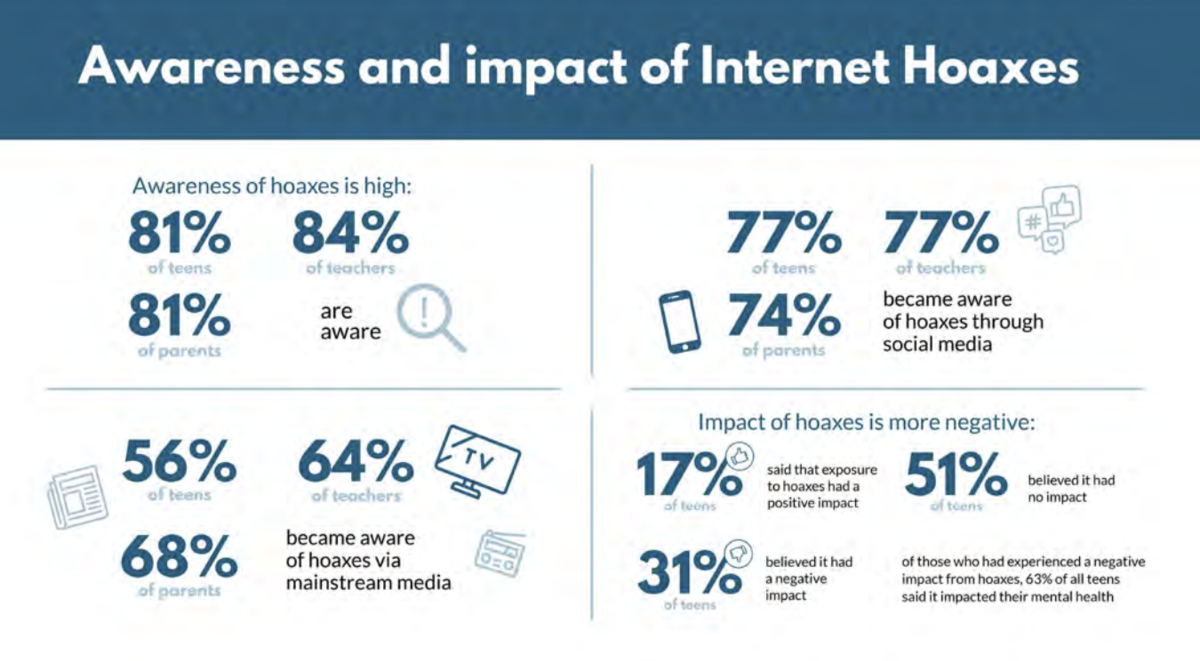

Social media was seen to play the biggest role in generating awareness of these challenges, but the influence of traditional media was also significant.

When teens were asked to describe a recent online challenge, 48 percent of the challenges described were considered safe, 32 percent involved some risk but were still regarded as safe, 14 percent were viewed as risky and dangerous, and 3 percent were described as very dangerous. Only 0.3 percent of the teenagers quizzed said they had taken part in a challenge they thought was really dangerous.

Meanwhile, 46 percent of teens said they wanted “good information on risks more widely” along with “information on what is too far.” Receiving good information on risks was also ranked as a top preventative strategy by parents (43 percent) and teachers (42 percent).

Earlier this year, the AFP reported that a Pakistani teenager died while pretending to kill himself as his friends recorded a TikTok video. In January, another Pakistani teenager was killed after being hit by a train, and last year, a security guard died while playing with his rifle as he made a clip.

Such videos were categorized in the report as “suicide and self-harm hoaxes,” in which the intention is to show something fake and trick people into believing it is true.

Not only could challenges go horribly wrong, as evidenced by the Pakistan cases, but they could also spread fear and panic among viewers. Internet hoaxes were shown to have had a negative impact on 31 percent of teens, and of those, 63 percent said it was their mental health that had been affected.

Based on the findings of the report, TikTok was strengthening protection efforts on the platform, including by removing certain warning videos. The research indicated that warnings about self-harm hoaxes could affect young people's well-being, since such warnings often treated the hoax as real. As a result, the company planned to remove alarmist warnings while allowing conversation that dispelled panic and promoted accurate information.

Although it already had safety policies in place, the firm was now working to expand enforcement measures. The platform had created technology that alerts safety teams to sudden increases in violating content linked to hashtags, and had now expanded it to capture potentially dangerous behavior.

TikTok also intends to build on its Safety Center by providing new resources such as those dedicated to online challenges and hoaxes and improving its warning labels to redirect users to the right resources when they search for content related to harmful challenges or hoaxes.

The company said the report was the first step in making “a thoughtful contribution to the safety and safeguarding of families online,” adding that it would “continue to explore and implement additional measures on behalf of the community.”