DUBAI: It was way back in the late 1980s that I first encountered the expressions “artificial intelligence,” “pattern recognition” and “image processing.” I was completing the final semester of my undergrad college studies, while also writing up my last story for the campus magazine of the Indian Institute of Technology at Kharagpur.

Never having come across these technical terms during the four years I majored in instrumentation engineering, I was surprised to discover that the smartest professors and the brightest postgrad students of the electronics and computer science and engineering departments of my own college were neck-deep in research and development work involving AI technologies. All while I was blissfully preoccupied with the latest Madonna and Billy Joel music videos and Time magazine stories about glasnost and perestroika.

Now that the genie is out, the question is whether or not Big Tech is willing or even able to address the issues raised by the runaway growth of AI. (Supplied)

More than three decades on, William Faulkner’s oft-quoted saying, “the past is never dead. It is not even past,” rings resoundingly true to me, albeit for reasons more mundane than sublime. Terms I seldom bumped into as a newspaperman and editor since leaving campus — “artificial intelligence,” “machine learning” and “robotics” — have sneaked back into my life, this time not as semantic curiosities but as man-made creations for good or ill, with the power to make me redundant.

Indeed, an entire cottage industry that did not exist just six months ago has sprung up to both feed and whet a ravenous global public appetite for information on, and insights into, ChatGPT and other AI-powered web tools.

Teachers are seen behind a laptop during a workshop on the ChatGPT bot organized by the School Media Service (SEM) of the Public education of the Swiss canton of Geneva on February 1, 2023. (AFP)

The initial questions about what kind of jobs would be created and how many professions would be affected have given way to far more profound discussions. Can conventional religions survive the challenges that will spring from artificial intelligence in due course? Will humans ever need to rack their brains to write fiction, compose music or paint masterpieces? How long will it take before a definitive cure for cancer is found? Can public services and government functions be performed by vastly more efficient and cheaper chatbots in the future?

As recently as October last year, few of us employed outside of the arcane world of AI could have anticipated an explosion of existential questions of this magnitude in our lifetime. The speed with which they have moved from the fringes of public discourse to center stage is at once a reflection of the severely disruptive nature of the developments and their potentially unsettling impact on the future of civilization. Like it or not, we are all engineers and philosophers now.

Attendees watch a demonstration on artificial intelligence during the LEAP Conference in Riyadh last February. (Supplied)

By most accounts, as yet no jobs have been eliminated and no collapse of the post-Impressionist art market has occurred as a result of the adoption of AI-powered web tools, but if the past (as well as Ernest Hemingway’s famous phrase) is any guide, change will happen at first “gradually, then suddenly.”

In any event, the world of work has been evolving almost imperceptibly but steadily since automation disrupted the settled rhythms of manufacturing and service industries that were essentially byproducts of the First Industrial Revolution.

For people of my age group, a visit to a bank today bears little resemblance to one undertaken in the 1980s and 1990s, when withdrawing cash meant standing in an orderly line first for a metal token, then waiting patiently in a different queue to receive a wad of hand-counted currency notes, each process involving the signing of multiple counterfoils and the spending of precious hours.

Although the level of efficiency likely varied from country to country, the workflow required to dispense cash to bank customers before the advent of automated teller machines was more or less the same.

Similarly, a visit to a supermarket in any modern city these days feels rather different from the experience of the late 1990s. The row upon row of checkout staff have all but disappeared, leaving behind a lean-and-mean mix with the balance tilted decidedly in favor of self-service lanes equipped with bar-code scanners, contactless credit-card readers and thermal receipt printers.

Whatever one may call these endangered jobs in retrospect, minimum-wage drudgery or decent livelihood, society seems to have accepted that there is no turning the clock back on technological advances whose benefits outweigh the costs, at least from the point of view of business owners and shareholders of banks and supermarket chains.

Likewise, with the rise of generative AI (GenAI) a new world order (or disorder) is bound to emerge, perhaps sooner rather than later, but of what kind, only time will tell.

Just four months after ChatGPT was launched, OpenAI's conversational chatbot is now facing at least two complaints before a regulatory body in France over its use of personal data. (AFP)

In theory, ChatGPT could tell too. To this end, many a publication, including Arab News, has carried interviews with the chatbot, hoping to get the truth from the machine’s mouth, so to speak, instead of relying on the thoughts and prescience of mere humans.

But the trouble with ChatGPT is that the answers it punches out depend on the “prompts” or questions it is asked. The answers will also vary with every update of its training data and the lessons it draws from these data sets’ internal patterns and relationships. Put simply, what ChatGPT or GPT-4 says about its destructive powers today is unlikely to remain unchanged a few months from now.

Meanwhile, tantalizing though the tidbits have been, the occasional interview with the CEO of OpenAI, Sam Altman, or the CEO of Google, Sundar Pichai, has shed little light on the ramifications of rapid GenAI advances for humanity.

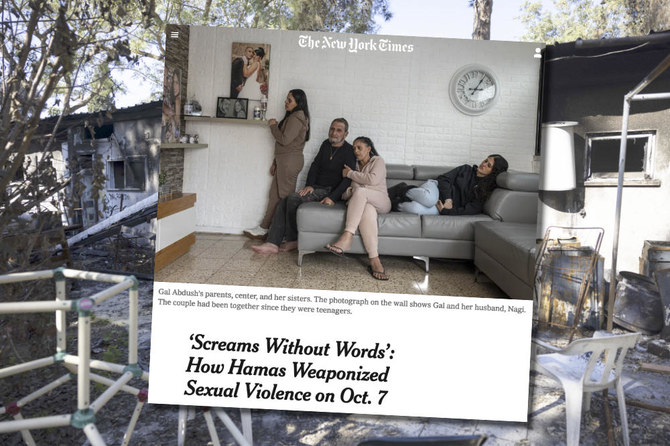

OpenAI CEO Sam Altman, left, and Microsoft CEO Satya Nadella. (AFP)

With multibillion-dollar investments at stake and competition for market share intensifying between Silicon Valley companies, these chief executives, as well as Microsoft CEO Satya Nadella, can hardly be expected to answer objectively the many burning questions, starting with whether Big Tech ought to declare “a complete global moratorium on the development of AI.”

Unfortunately for a large swathe of humanity, the great debates of the day, featuring polymaths who can talk without fear or favor about a huge range of intellectual and political trends, are raging mostly out of reach behind strict paywalls of publications such as Bloomberg, Wall Street Journal, Financial Times, and Time.

An essay by Niall Ferguson, the pre-eminent historian of the ideas that define our time, published in Bloomberg on April 9, offers a peek into the deepest worries of philosophers and futurists, implying that the fears of large-scale job displacements and social upheavals are nothing compared to the extreme risks posed by galloping AI advancements.

“Most AI does things that offer benefits not threats to humanity … The debate we are having today is about a particular branch of AI: the large language models (LLMs) produced by organizations such as OpenAI, notably ChatGPT and its more powerful successor GPT-4,” Ferguson wrote before going on to unpack the downsides.

In sum, he said: “The more I read about GPT-4, the more I think we are talking here not about artificial intelligence … but inhuman intelligence, which we have designed and trained to sound convincingly like us. … How might AI off us? Not by producing (Arnold) Schwarzenegger-like killer androids (of the 1984 film “The Terminator”), but merely by using its power to mimic us in order to drive us insane and collectively into civil war.”

Intellectually ready or not, behemoths such as Microsoft, Google and Meta, together with not-so-well-known startups like Adept AI Labs, Anthropic, Cohere and Stable Diffusion API, have had greatness thrust upon them by virtue of having developed their own LLMs with the aid of advances in computational power and mathematical techniques that have made it possible to train AI on ever larger data sets.

Just like in Hindu mythology, where Shiva, as the Lord of Dance Nataraja, takes on the persona of a creator, protector and destroyer, in the real world tech giants and startups (answerable primarily to profit-seeking shareholders and venture capitalists) find themselves playing what many regard as the combined role of creator, protector and potential destroyer of human civilization.

Microsoft is the “exclusive” provider of cloud computing services to OpenAI, the developer of ChatGPT. (AFP file)

While it does seem that a science-fiction future is closer than ever before, no technology exists as of now to turn back time to 1992 and enable me to switch from instrumentation engineering to computer science instead of a vulnerable occupation like journalism. Jokes aside, it would be disingenuous of me to claim that I have not been pondering the “what-if” scenarios of late.

Not because I am terrified of being replaced by an AI-powered chatbot in the near future and compelled to sign up for retraining as a food-delivery driver. Journalists are certainly better psychologically prepared for such a drastic reversal of fortune than the bankers and property owners in Thailand who overnight had to learn to sell food on the footpaths of Bangkok to make a living in the aftermath of the 1997 Asian financial crisis.

The regret I have is more philosophical than material: We are living in a time when engineers who had been slogging away for years in the forgotten groves of academe and industry, pushing the boundaries of AI and machine learning one autocorrect code at a time, are finally getting their due as the true masters of the universe. It would have felt good to be one of them, no matter how relatively insignificant one’s individual contribution.

There is a vicarious thrill, though, in tracking the achievements of a man by the name of P. Sundararajan, who won admission to my alma mater to study metallurgical engineering one year after I graduated.

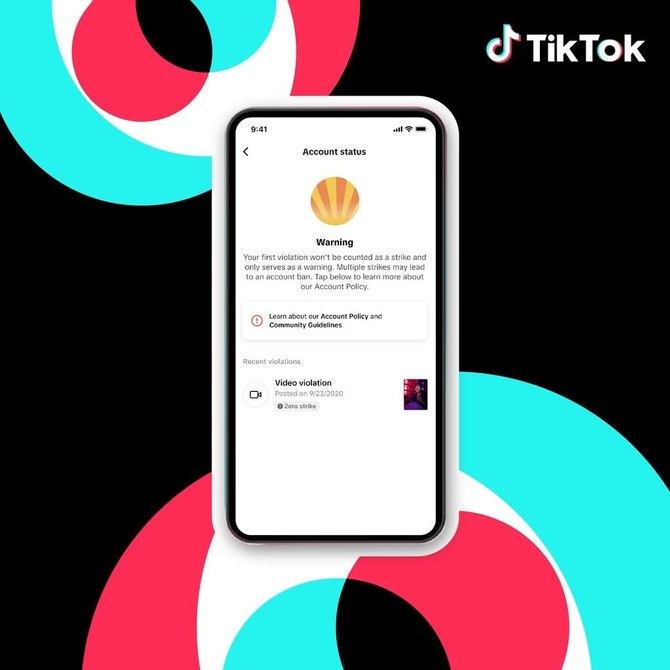

Google Inc. CEO Sundar Pichai (C) is applauded as he arrives to address students during a forum at The Indian Institute of Technology in Kharagpur, India, on January 5, 2017. (AFP file)

Now 50 years old, he has a big responsibility in shaping the GenAI landscape, although he probably had no inkling of what fate had in store for him when he was focused on his electronic materials project in the final year of his undergrad studies. That person is none other than Sundar Pichai, whose path to the office of Google CEO went via IIT Kharagpur, Stanford University and Wharton business school.

Now, just as in the final semester of my engineering studies, I have no illusions about the exceptionally high IQ required to be even a writer of code for sophisticated computer programs. In an age of increasing specialization, “horses for courses” is not only a rational approach, it is practically the only game in town.

I am perfectly content with the knowledge that in the pre-digital 1980s, well before the internet as we know it had even been created, I had got a glimpse of the distant exciting future while reporting on “artificial intelligence,” “pattern recognition” and “image processing.” Only now do I fully appreciate how great a privilege it was.